You can use the Google Indexing API to notify Google about new, updated, or removed pages on your website in real time. This can help fresh content appear in search results sooner. The Indexing API can be used through plugins, SDKs, or specialized Google Indexing applications.

To get started with the Google Indexing API, you must follow a series of technical steps. They involve setting up a project in the Google Cloud Console, enabling APIs, and creating credentials.

Setting up the API can be technical, but it’s a rewarding process. Webmasters can control how their content is found and served in search results by implementing the API correctly.

Google’s Indexing API plays a pivotal role in how content is introduced to Google Search. It provides webmasters and developers with a direct line of communication to signal changes on their websites.

Indexing is a fundamental process that search engines like Google use to collect and store data from web pages to display in search results. When a page is indexed, it’s included in Google’s vast library of content, allowing it to appear to users when they search for relevant terms. Traditionally, Google discovers content by crawling the web and indexing pages as it goes.

The Google Indexing API has one distinct function: it lets site owners notify Google promptly when pages are added, updated, or removed. It prompts Google to recrawl those URLs, enabling a more efficient interaction with Google's search ecosystem. The API is especially useful for websites whose content changes frequently, because it can shorten the usual wait for crawl-based indexing. Site owners can use Google Search Console together with the Google Indexing API to manage index updates and track the status of their pages.
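To make the two kinds of notification concrete, here is a minimal Python sketch of the JSON body for a single notification. It assumes the `urlNotifications:publish` request format from Google's Indexing API reference, where `type` is `URL_UPDATED` for new or changed pages and `URL_DELETED` for removed ones; the function name `build_notification` is illustrative.

```python
import json

# Notification types accepted by urlNotifications:publish.
URL_UPDATED = "URL_UPDATED"  # page is new or its content changed
URL_DELETED = "URL_DELETED"  # page was removed from the site


def build_notification(url: str, removed: bool = False) -> str:
    """Return the JSON request body for one publish notification."""
    notification_type = URL_DELETED if removed else URL_UPDATED
    return json.dumps({"url": url, "type": notification_type})


print(build_notification("https://example.com/jobs/123"))
# {"url": "https://example.com/jobs/123", "type": "URL_UPDATED"}
print(build_notification("https://example.com/jobs/99", removed=True))
```

Each notification covers exactly one URL, so a site that changes many pages sends one such body per page.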

Integrating the Indexing API requires setting up a service account through the Google Cloud Platform. Then, one must verify ownership of their website in the Google Search Console. Proper implementation allows for faster content updates in the search results. This can be a significant advantage in SEO.

When using Google’s Indexing API, developers need to do some setup tasks. These include creating a Google Cloud Platform project, enabling the API, verifying website ownership, and creating a service account.


You can use our onboarding procedure for Connecting a GCP project.

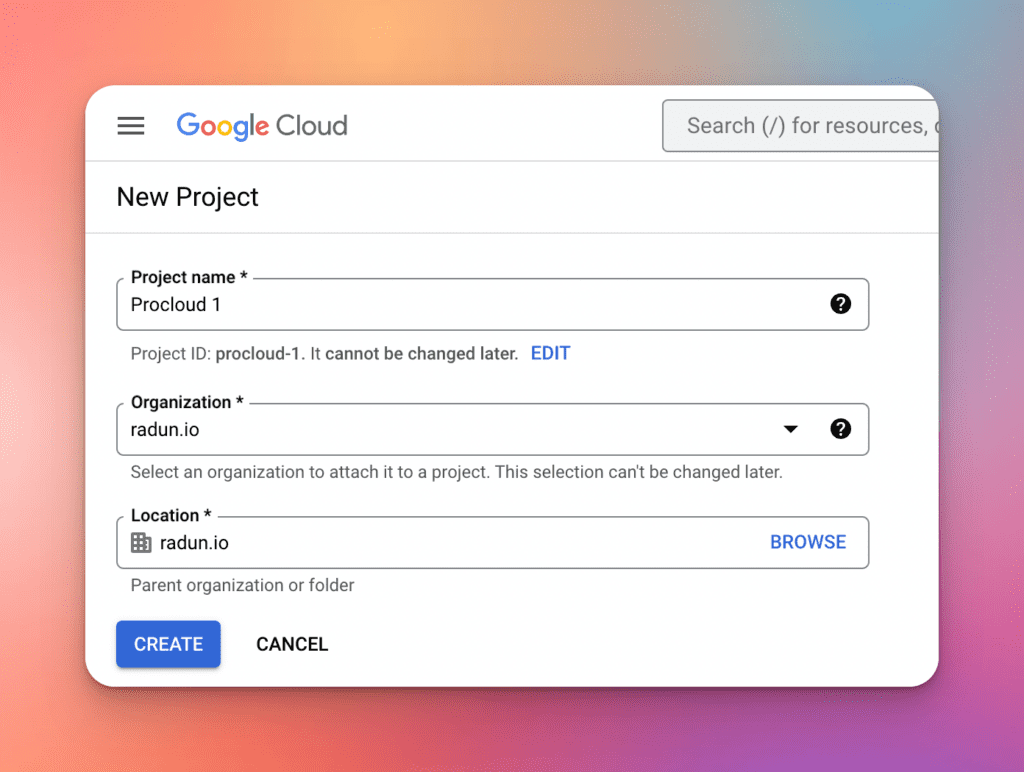

Visit the https://console.cloud.google.com/projectcreate page and choose a name for the new project. A good convention is to add a numeric suffix to each name so you can tell projects apart later, but you're free to name them however you like.

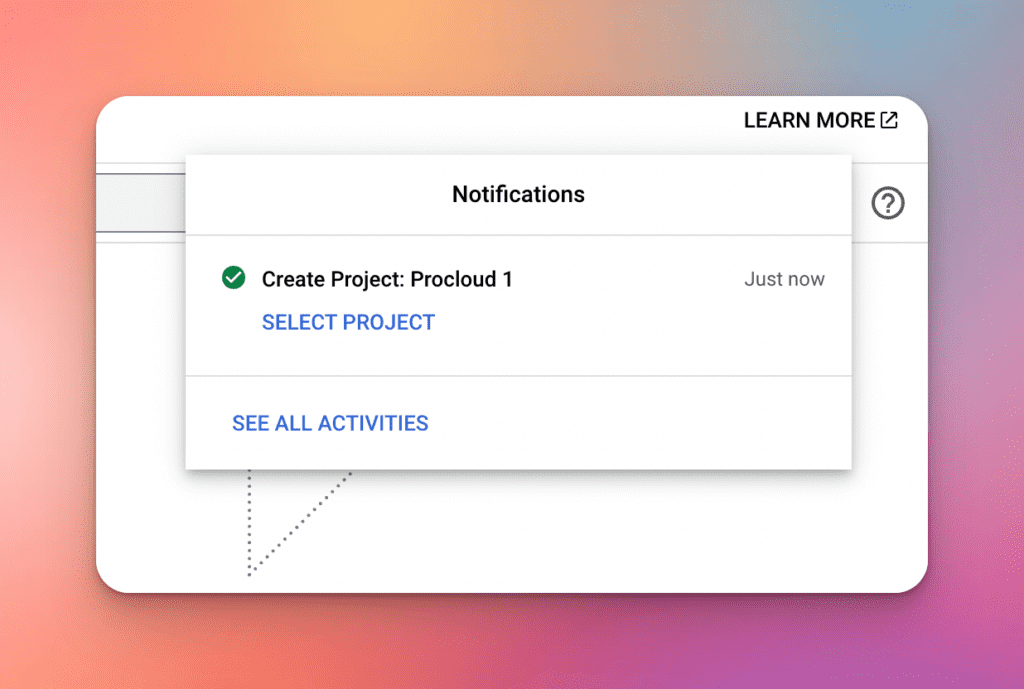

After a few seconds, GCP will create a new project, and you’ll see a notification in the top-right corner.

Select the new project.

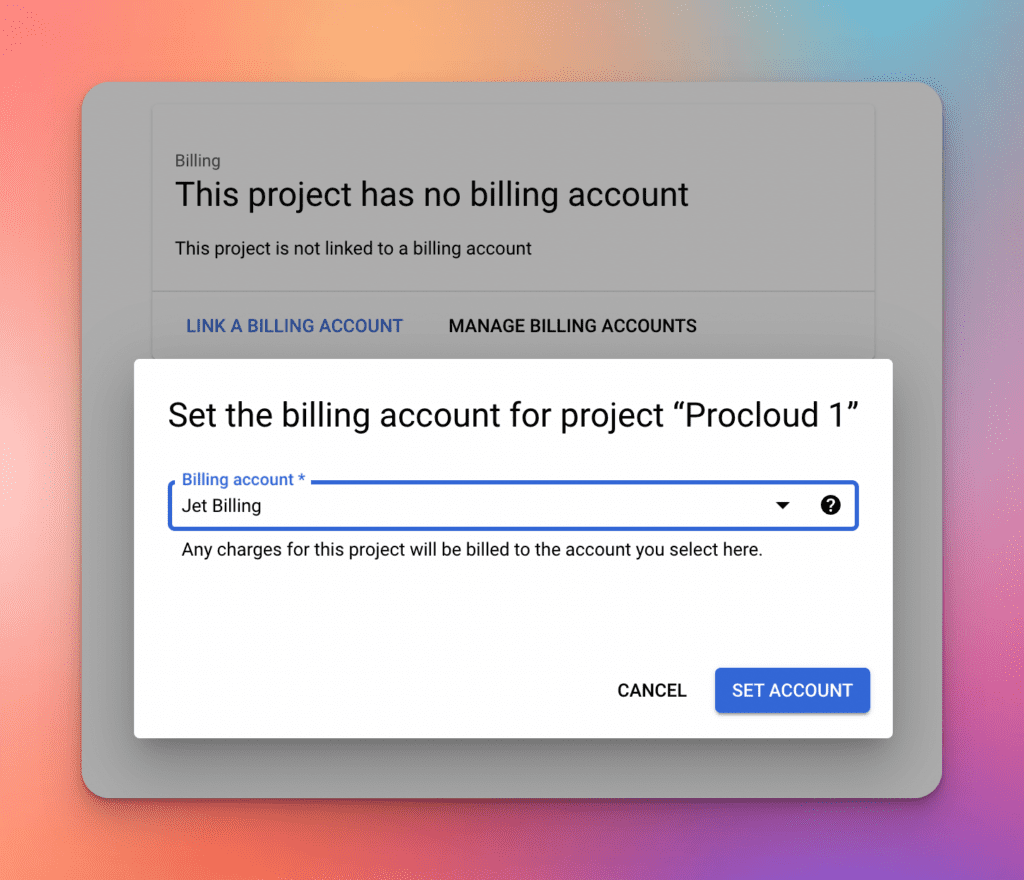

In the sidebar, open the Billing page.

There, create a new billing account or link an existing one.

GCP requires a linked billing account before you can enable the Google Indexing API; this helps prevent abuse of its services.

Once the billing account is linked, you can proceed to enable APIs.

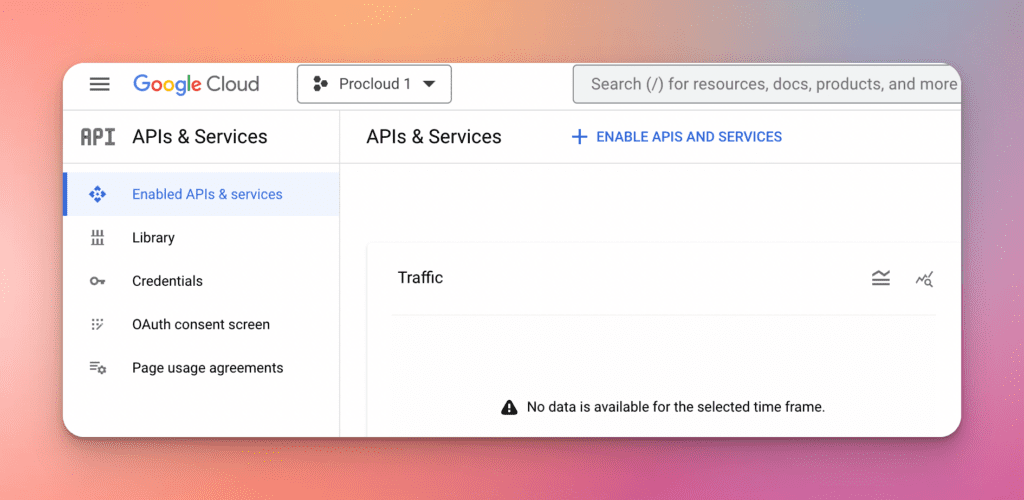

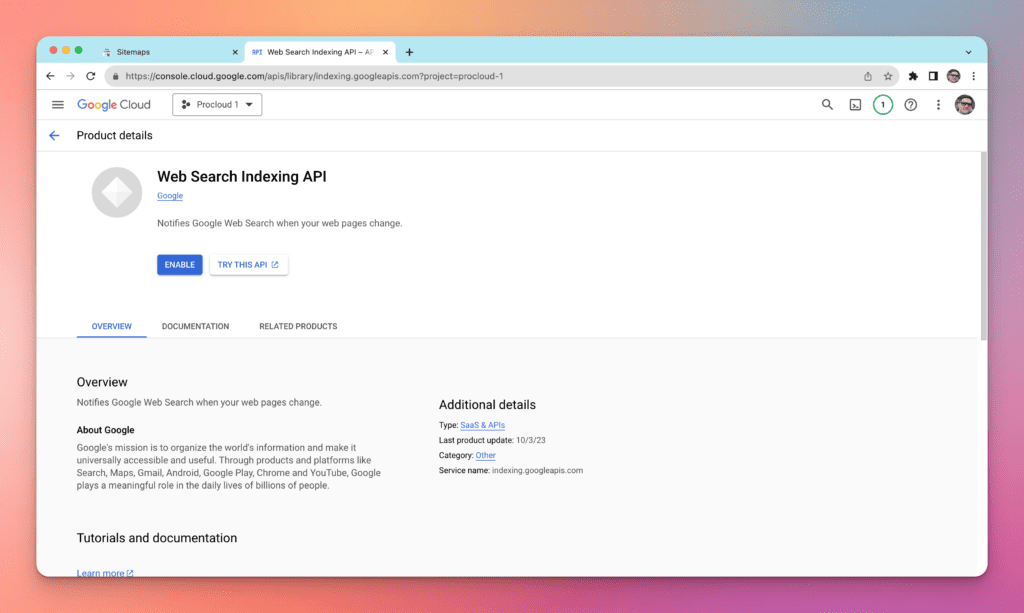

Open APIs & Services and click the Enable APIs and Services button.

Search for Web Search Indexing API, open it, and click the Enable button.
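If you prefer the command line, the console steps above can be sketched with the `gcloud` CLI. The Python below only builds the command lines (it does not run them) and checks a candidate project ID against Google Cloud's ID rules: 6 to 30 characters, lowercase letters, digits, and hyphens, starting with a letter and not ending with a hyphen. The helper names are hypothetical, and linking the billing account is left to the console.

```python
import re
import shlex

# Google Cloud project ID rules: 6-30 chars, lowercase letters, digits and
# hyphens, must start with a letter and must not end with a hyphen.
PROJECT_ID_RE = re.compile(r"^[a-z][a-z0-9-]{4,28}[a-z0-9]$")


def is_valid_project_id(project_id: str) -> bool:
    return PROJECT_ID_RE.fullmatch(project_id) is not None


def setup_commands(project_id: str) -> list:
    """Build (but do not run) gcloud commands mirroring the console steps."""
    if not is_valid_project_id(project_id):
        raise ValueError(f"invalid project ID: {project_id!r}")
    return [
        # Create the project.
        ["gcloud", "projects", "create", project_id],
        # Enable the Indexing API (listed in the console as "Web Search Indexing API").
        ["gcloud", "services", "enable", "indexing.googleapis.com",
         f"--project={project_id}"],
    ]


# Numeric suffixes keep several projects easy to tell apart.
for number in (1, 2):
    for command in setup_commands(f"indexing-project-{number}"):
        print(shlex.join(command))
```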

The next step is verification. Website owners need to verify their site in Google Search Console, which confirms that they have permission to submit the site's URLs to Google. After verification, the site must be associated with the API project.

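Finally, you need to create a service account. It lets the Indexing API project act on the site owner's behalf. To do so:

1. In the Google Cloud Console, go to IAM & Admin > Service Accounts and create a service account in the project.
2. Create a JSON key for the service account and store the downloaded file securely.
3. In Google Search Console, add the service account's email address as an Owner of the verified property.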
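The service account and its key can also be created with `gcloud`. This sketch assumes the default service-account email format `NAME@PROJECT_ID.iam.gserviceaccount.com` and only builds the commands; `indexing-bot`, `indexing-project-1`, and the key file name are placeholders. Adding the account as an Owner still has to be done in the Search Console interface.

```python
import shlex


def service_account_email(name: str, project_id: str) -> str:
    # Default email format for user-created service accounts.
    return f"{name}@{project_id}.iam.gserviceaccount.com"


def service_account_commands(name: str, project_id: str,
                             key_file: str = "indexing-key.json") -> list:
    """Build (but do not run) gcloud commands for the account and its JSON key."""
    email = service_account_email(name, project_id)
    return [
        ["gcloud", "iam", "service-accounts", "create", name,
         f"--project={project_id}"],
        # The key file grants access to the account: keep it out of version control.
        ["gcloud", "iam", "service-accounts", "keys", "create", key_file,
         f"--iam-account={email}"],
    ]


for command in service_account_commands("indexing-bot", "indexing-project-1"):
    print(shlex.join(command))
# This is the address to add as an Owner of the property in Search Console:
print(service_account_email("indexing-bot", "indexing-project-1"))
```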

The implementation of the Google Indexing API involves a clear sequence of steps. It also requires precise URL submissions and appropriate error handling. By following these protocols, developers can effectively communicate the state of web documents to Google.

Once the setup is complete, developers can submit URLs using the API. It's crucial to ensure that the URLs are accurate and comply with Google's guidelines. Submission is done programmatically by sending POST requests to the Indexing API's `urlNotifications:publish` method, with the URL and the notification type (`URL_UPDATED` or `URL_DELETED`) in the JSON request body. Search Console also plays an important role in this process: developers can track the indexing status of submitted URLs through its interface.
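A publish call is an authenticated HTTPS POST. The standard-library sketch below builds that request against the `https://indexing.googleapis.com/v3/urlNotifications:publish` endpoint. It assumes you already have an OAuth 2.0 access token for the service account with the `https://www.googleapis.com/auth/indexing` scope (for example, obtained with a library such as google-auth, not shown); `build_publish_request` and `publish` are illustrative names.

```python
import json
import urllib.request

PUBLISH_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"


def build_publish_request(page_url: str, access_token: str,
                          notification_type: str = "URL_UPDATED") -> urllib.request.Request:
    """Build an authenticated POST request for one URL notification."""
    body = json.dumps({"url": page_url, "type": notification_type}).encode("utf-8")
    return urllib.request.Request(
        PUBLISH_ENDPOINT,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumes a valid OAuth 2.0 token with the indexing scope.
            "Authorization": f"Bearer {access_token}",
        },
    )


def publish(page_url: str, access_token: str,
            notification_type: str = "URL_UPDATED") -> dict:
    """Send the notification and return the decoded JSON response."""
    request = build_publish_request(page_url, access_token, notification_type)
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Splitting request construction from sending keeps the payload easy to inspect and test without network access; `publish("https://example.com/jobs/123", token)` performs the actual call.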

Proper error handling is vital for maintaining the integrity of the submission process. The Google Indexing API may return errors for various reasons, such as permission problems (for example, when the service account is not an owner of the Search Console property) or exceeded quotas. Developers should monitor their usage on the API's Quotas page in the Google Cloud Console to avoid hitting limits, and they should handle errors gracefully, for example by retrying rate-limited or transient failures with exponential backoff.
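Retry logic with exponential backoff can be sketched as follows. The set of retryable status codes (429 for rate limiting, 500 and 503 for transient server errors) is an assumption to adapt; a 403, which typically signals a permission problem, is re-raised immediately because retrying will not fix it. `send` is any zero-argument callable that performs one request, such as a wrapper around a publish call.

```python
import random
import time
import urllib.error

# Assumed retryable statuses: rate limiting and transient server errors.
RETRYABLE_STATUSES = {429, 500, 503}


def with_backoff(send, max_attempts: int = 5, base_delay: float = 1.0,
                 sleep=time.sleep):
    """Call send(), retrying retryable HTTP errors with exponential backoff.

    After failed attempt n (0-based) it waits base_delay * 2**n seconds plus
    random jitter. Non-retryable errors and the final failure are re-raised.
    """
    for attempt in range(max_attempts):
        try:
            return send()
        except urllib.error.HTTPError as error:
            if error.code not in RETRYABLE_STATUSES or attempt == max_attempts - 1:
                raise
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)
```

The `sleep` parameter is injectable so the backoff schedule can be tested without actually waiting; the jitter spreads out retries when several workers hit the limit at once.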

Effective indexing is crucial for ensuring that a website’s content is discoverable by search engines. Adhering to best practices can significantly enhance the probability of having pages indexed quickly and accurately.

Content must be both accessible to search engines and valuable to users to be indexed efficiently. This involves using clear, descriptive titles and headings (H1, H2, H3). Ensure all content is original and offers substantial information. Use keywords judiciously to match user search intent without compromising readability, and avoid keyword stuffing.

A sitemap plays an instrumental role in indexing. It outlines the structure of a site, enabling search engines to crawl it more effectively.

Maintaining an up-to-date sitemap can help search engines find new pages quickly.
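A minimal sitemap can be generated with Python's standard library. The sketch follows the sitemaps.org 0.9 protocol (`urlset`, `url`, `loc`, and the optional `lastmod`); the input format, a list of `(loc, lastmod)` pairs, is an assumption for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries) -> str:
    """Return sitemap XML for an iterable of (loc, lastmod) pairs.

    lastmod is a W3C date string such as "2024-05-01", or None to omit it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # special characters are escaped
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


print(build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/jobs/123", None),
]))
```

Regenerating the file whenever pages are added keeps `lastmod` accurate, which is what makes the sitemap useful for discovering new content.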

The Instant Indexing Application can expedite the indexing of new content on a website. It sends updates directly to Google via the Indexing API, which is particularly efficient for sites with rapidly changing content, such as job listings or event broadcasting services. The application integrates seamlessly with the website's existing infrastructure.

Using the Jetindexer – Instant Indexing Application can provide significant advantages. It helps keep a website’s content freshly indexed by search engines.

Effective management of the indexing process is crucial. It ensures that a website’s URLs are tracked and indexed properly. This allows webmasters to understand how their content appears in search results and how to optimize visibility.

Google’s Search Console provides a suite of tools that offer insights into a website’s indexing status. Webmasters should regularly review the Index Coverage report. It details pages that have been successfully indexed, warnings about potential issues, and reasons why certain URLs haven’t been indexed. This data is essential to diagnose and resolve problems that could affect search visibility.

To keep tabs on which URLs have been indexed, a webmaster can use the URL Inspection tool within Search Console. This tool allows for real-time checking of a page's indexing status. For large websites, the Google Indexing API can automate the notification side of this process, telling Google immediately when URLs are added or changed, which is particularly useful for pages with time-sensitive content. However, Google supports this API only for pages with JobPosting or BroadcastEvent (embedded in a VideoObject) structured data. Tracking indexed URLs enables webmasters to ensure that their latest and most relevant pages are available to searchers.
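The Indexing API also exposes a `urlNotifications/metadata` method that reports the latest update or removal notification Google has received for a URL. This reflects your notifications, not whether the page is indexed; index status comes from the URL Inspection tool. The sketch below only builds the GET request and assumes an OAuth 2.0 access token with the indexing scope, obtained separately; `build_metadata_request` is an illustrative name.

```python
import urllib.parse
import urllib.request

METADATA_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications/metadata"


def build_metadata_request(page_url: str, access_token: str) -> urllib.request.Request:
    """Build a GET request for the notification metadata of one URL."""
    query = urllib.parse.urlencode({"url": page_url})  # percent-encodes the URL
    return urllib.request.Request(
        f"{METADATA_ENDPOINT}?{query}",
        method="GET",
        # Assumes an OAuth 2.0 token with the indexing scope, obtained separately.
        headers={"Authorization": f"Bearer {access_token}"},
    )
```

Passing the request to `urllib.request.urlopen` and decoding the JSON response returns the stored notification details for that URL.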

For any website, visibility on Google is vital for attracting more visitors. A website can rank faster in search engine results by using targeted SEO strategies and optimizing for organic traffic.

Effective search engine optimization (SEO) is critical for improving a site's indexing. A more direct method is integrating with the Google Indexing API, which applies to content types like JobPosting or BroadcastEvent within a VideoObject. This integration notifies Google of new or removed pages, prompting a swift crawl and potentially improving rankings.

In addition, consistent, high-quality content updates signal to search engines that the website is a valuable resource. The inclusion of relevant keywords, structured data, and meta tags enhances crawlability. Websites should also ensure that their sitemaps are up to date and submitted to Google Search Console.

To boost organic traffic, one must focus on creating content that resonates with their audience and provides real value. Comprehensive articles, infographics, and interactive media can engage users and increase the time they spend on a site. This is a positive signal to search engines.

Building a network of backlinks from reputable sources is also a pillar of increasing visibility. They serve as endorsements from other sites, suggesting that the content is trustworthy and valuable.

Through strategic SEO practices and a focus on valuable content creation, websites can improve their visibility and organic reach.

When addressing issues with the Google Indexing API, it is crucial to pinpoint specific problems Googlebot may encounter. This can involve soft 404 errors or hurdles in the crawling process. Evaluation and resolution of these errors are essential for maintaining site visibility in Google search results.

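Soft 404 errors occur when a page appears to be missing or empty, but the server returns a success status (such as 200) instead of an actual 404 HTTP status code. This can mislead Googlebot and result in poor indexing. To resolve soft 404 errors, make sure that pages that no longer exist return a genuine 404 or 410 status code, and that pages that do exist serve substantive content rather than an empty or error-like template.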
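One way to spot likely soft 404s on your own site is a simple heuristic check of the status code and page body. This sketch is not Google's detection logic: the phrase list and the 200-character minimum are hypothetical values you would tune for your own templates.

```python
# Hypothetical phrases and threshold: tune them for your own templates.
NOT_FOUND_PHRASES = ("page not found", "no longer available", "no results found")
MIN_CONTENT_LENGTH = 200


def looks_like_soft_404(status: int, body: str) -> bool:
    """Flag a 200 response whose body reads like an error page or is nearly empty."""
    if status != 200:
        return False  # a real 404/410 (or other status) is not a *soft* 404
    text = body.strip().lower()
    if len(text) < MIN_CONTENT_LENGTH:
        return True
    return any(phrase in text for phrase in NOT_FOUND_PHRASES)
```

Pages flagged this way should either return a real 404/410 or be given meaningful content.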

Further guidance for soft 404 issues can be found in this comprehensive article on common Google indexing issues.

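Crawling errors can prevent Googlebot from accessing content, thus hindering the indexing process. Resolving them usually starts with identifying the affected URLs in Search Console and checking whether robots.txt rules or server errors are blocking Googlebot.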
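Python's `urllib.robotparser` can check whether robots.txt rules block Googlebot from URLs you are about to submit. This parser implements the original robots.txt rules with simple prefix matching, so it may disagree with Googlebot on wildcard patterns such as `*` and `$`; treat it as a quick sanity check. The robots.txt content and URLs below are placeholders.

```python
from urllib.robotparser import RobotFileParser


def blocked_for_googlebot(robots_txt: str, urls: list) -> list:
    """Return the URLs that the given robots.txt disallows for Googlebot."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch("Googlebot", url)]


robots = """User-agent: *
Disallow: /private/
"""
print(blocked_for_googlebot(robots, [
    "https://example.com/private/draft",
    "https://example.com/jobs/123",
]))
# ['https://example.com/private/draft']
```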

For technical details on dealing with crawl errors, consult the official Google Developers documentation on Indexing API errors.

In the realm of search engine optimization, using the Google Indexing API is a strategic move that can position a website favorably against competitors. Site owners should understand not just the tool itself, but also the broader landscape of search engine behavior and ranking mechanisms.

Google and other search engines continually update their algorithms to refine how they rank web pages, so keeping abreast of these changes is vital. When a search engine like Google releases an update, the way it interprets, indexes, and renders content can shift dramatically. Webmasters and SEO specialists should stay informed about these updates because even a small change can affect a page's visibility. For eligible pages, the Indexing API lets them prompt Google to recrawl and reindex content after an update, which can help important content stay current in search results, although it does not guarantee rankings.

Adaptation is key to maintaining and improving ranking positions as search engines like Google roll out changes. For instance, if a competitor's site is temporarily deindexed or drops in ranking after an update, that presents an opportunity. By reacting swiftly, for example by submitting important URLs through the Google Indexing API, a site can potentially climb in rankings and gain a temporary edge, a technique underscored by Rank Math's advice on the subject. This tactic is especially potent for time-sensitive content, where being indexed first can mean capturing market attention.

Using the Google Indexing API should be part of a comprehensive strategy to stay ahead of the competition. What ultimately determines who leads and who follows in search is not just reacting to changes, but anticipating them and staying informed.

The Google Indexing API lets website owners promptly inform Google about changes to their site content, giving them a direct way to request crawling and indexing of updated URLs. Faster indexing can, in turn, help new content reach searchers sooner.

The purpose of the Google Indexing API is to let site owners notify Google directly when pages are added, updated, or removed, which prompts a fresh crawl and can lead to higher user traffic. Google supports it only for pages that include JobPosting structured data or BroadcastEvent structured data embedded in a VideoObject.
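For reference, a minimal JobPosting block can be generated as JSON-LD. This sketch includes the properties Google lists as required for job postings (`title`, `description`, `datePosted`, `hiringOrganization`, and `jobLocation` for on-site jobs); recommended fields such as `validThrough` are omitted, all values are placeholders, and the function name is hypothetical. Check Google's JobPosting documentation before relying on it.

```python
import json


def job_posting_jsonld(title: str, description: str, date_posted: str,
                       org_name: str, locality: str, country: str) -> str:
    """Return a <script> tag with minimal JobPosting JSON-LD (placeholder values)."""
    data = {
        "@context": "https://schema.org/",
        "@type": "JobPosting",
        "title": title,
        "description": description,
        "datePosted": date_posted,  # ISO 8601 date, e.g. "2024-05-01"
        "hiringOrganization": {"@type": "Organization", "name": org_name},
        "jobLocation": {
            "@type": "Place",
            "address": {
                "@type": "PostalAddress",
                "addressLocality": locality,
                "addressCountry": country,
            },
        },
    }
    # Escape "</" so text such as "</script>" cannot close the tag early.
    payload = json.dumps(data).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'
```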

To integrate the Google Indexing API with a WordPress site, site owners can use tools such as the Instant Indexing for Google plugin. This plugin facilitates the indexing process directly from the WordPress dashboard.

Google imposes certain quotas and limitations on the number of requests that can be submitted through the Indexing API. It is essential for developers to understand these restrictions to prevent exceeding the maximum allowable requests.
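A simple client-side guard can keep a script from exceeding a daily request budget. The limit is passed in rather than hard-coded: read the actual value for your project on the API's Quotas page. Google Cloud daily quotas generally reset at midnight Pacific Time, so counting by local date, as this hypothetical `DailyQuota` class does, is only an approximation.

```python
import datetime


class DailyQuota:
    """Client-side daily request budget (an approximation of the server quota)."""

    def __init__(self, limit_per_day: int):
        self.limit = limit_per_day  # read the real value from the Quotas page
        self.day = None
        self.used = 0

    def try_acquire(self, today=None) -> bool:
        """Count one request and return True, or return False if the budget is spent."""
        today = today or datetime.date.today()
        if today != self.day:  # new day: reset the counter
            self.day, self.used = today, 0
        if self.used >= self.limit:
            return False
        self.used += 1
        return True
```

Call `try_acquire()` before each request and stop or defer submissions when it returns `False`.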

You can find a detailed, step-by-step guide for submitting URLs in Google’s own documentation. The guide covers building, testing, and releasing structured data for the web. It explains how to insert the necessary properties and submit URLs via the API.

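The Indexing API does not use API keys. Requests are authorized with OAuth 2.0: the service account's JSON key, created in the Google Cloud Console, is used to obtain an access token with the `https://www.googleapis.com/auth/indexing` scope, and that token must be sent with every request to authenticate and authorize the interaction with the API.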

The Google Indexing API accepts one URL per notification. Multiple notifications can be combined into a single HTTP batch request (up to 100 calls per batch), but each URL in a batch still counts toward the quota.